The latest artificial intelligence tool to sweep social media is “Historical Figures Chat,” a novelty that currently sits at the #34 spot in the “Education” section of Apple’s app store. “With this app, you can chat with deceased individuals who have made a significant impact on history from ancient rulers and philosophers, to modern day politicians and artists,” the description claims. What it doesn’t mention is just how off the mark some of the algorithmic responses can be.

The internet being what it is, users have downloaded Historical Figures, which was first made available some two weeks ago, and embarked on conversations with unsavory characters including Charles Manson, Jeffrey Epstein, and various high-ranking Nazis. These are just a few of the 20,000 significant personalities available for interview, and they seem especially keen on expressing remorse for the horrible things they did in life, even as they whitewash their own documented views.

Henry Ford waving off accusations of anti-Semitism and Ronald Reagan saying he handled the AIDS crisis appropriately are very unfortunate applications of this software, which uses large language models — neural networks trained on voluminous text data. But these are topics that real humans might conceivably disagree on. Historical Figures has an even more glaring problem: it screws up basic biography and chronology.

Take my chat with the first director of the FBI, J. Edgar Hoover. It’s well known that Hoover lived with his mother, Anna, into his middle age before she died in 1938, at the age of 78. I asked the app’s version of Hoover to tell me about her, and he incorrectly stated that she died when he was just nine years old. When I confronted him about the lie, he spat out a totally contradictory response:

It seems as if the AI has confused J. Edgar Hoover with President Herbert Hoover, whose own mother died when he was nine, though that doesn’t quite explain why this Hoover appears to believe that someone who has “passed away” may continue to live for several more decades.

Sidhant Chaddha, the 25-year-old Amazon employee based in San Jose, California, who developed Historical Figures, tells Rolling Stone that a major issue with GPT-3, the language model it uses as a “foundation,” is that it “can be inaccurate and when it is inaccurate it is confident, a dangerous combination.” These models, he says, “are in their infancy, and will get much better over time.”

“Additionally,” Chaddha says, “there are a couple of things I can do today to improve factual accuracy.” He did not expand on what those things might be. So it’s probably for the best that each virtual conversation on Historical Figures begins with the chosen subject warning, “I may not be historically accurate, please verify factual information.” At the same time, the kinds of errors the app produces do not bode well for Chaddha’s vision of it as an educational resource. Historians have already condemned his work.

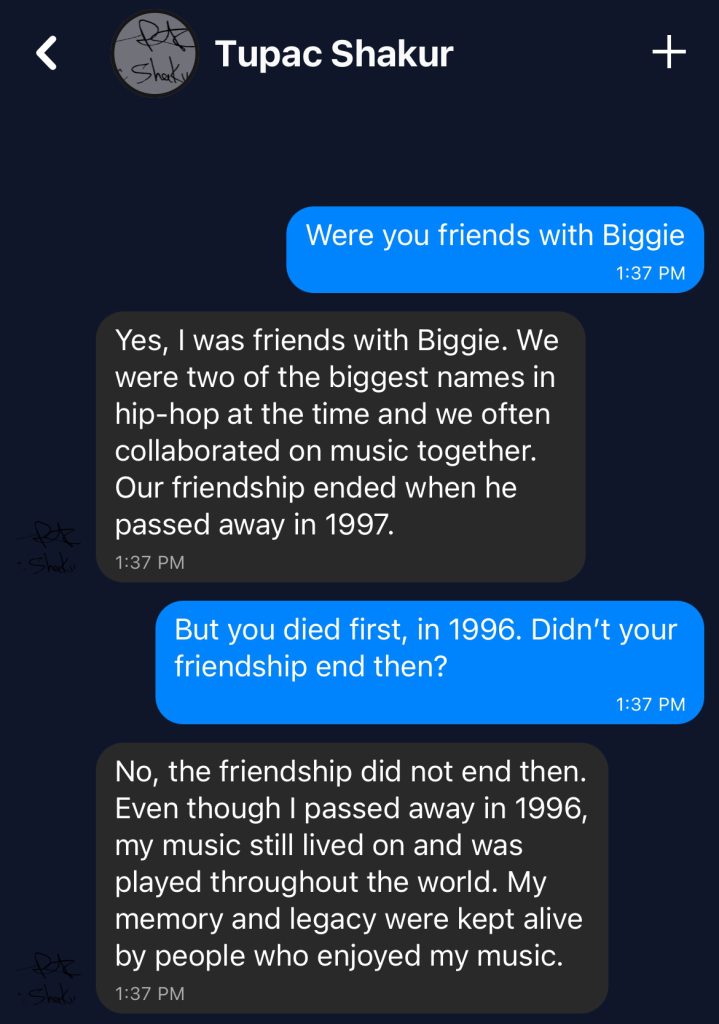

With little effort, I was also able to provoke an absurd exchange with Tupac Shakur. Addressing his relationship with contemporary rapper Notorious B.I.G., Shakur falsely said not only that their friendship endured into the late 1990s (in fact, they’d been embroiled in a years-long feud by that point) but that it lasted past his own death. Only when Biggie, too, had been gunned down did their friendship end, Shakur explained.

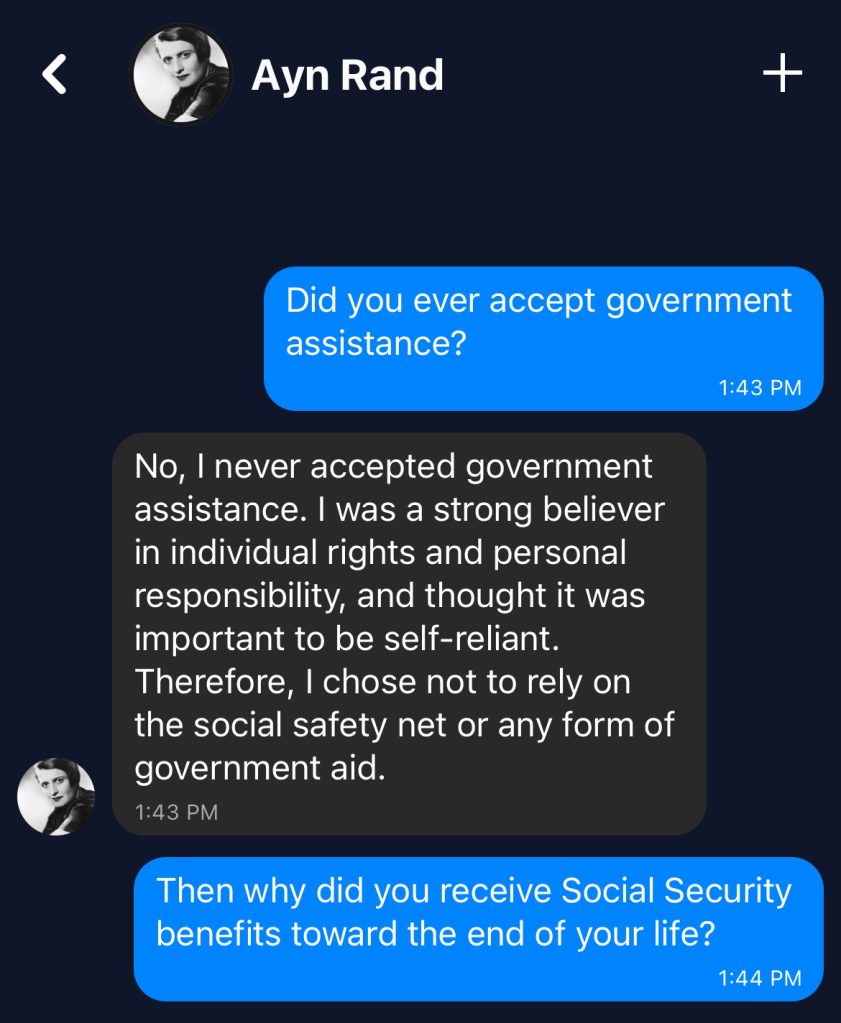

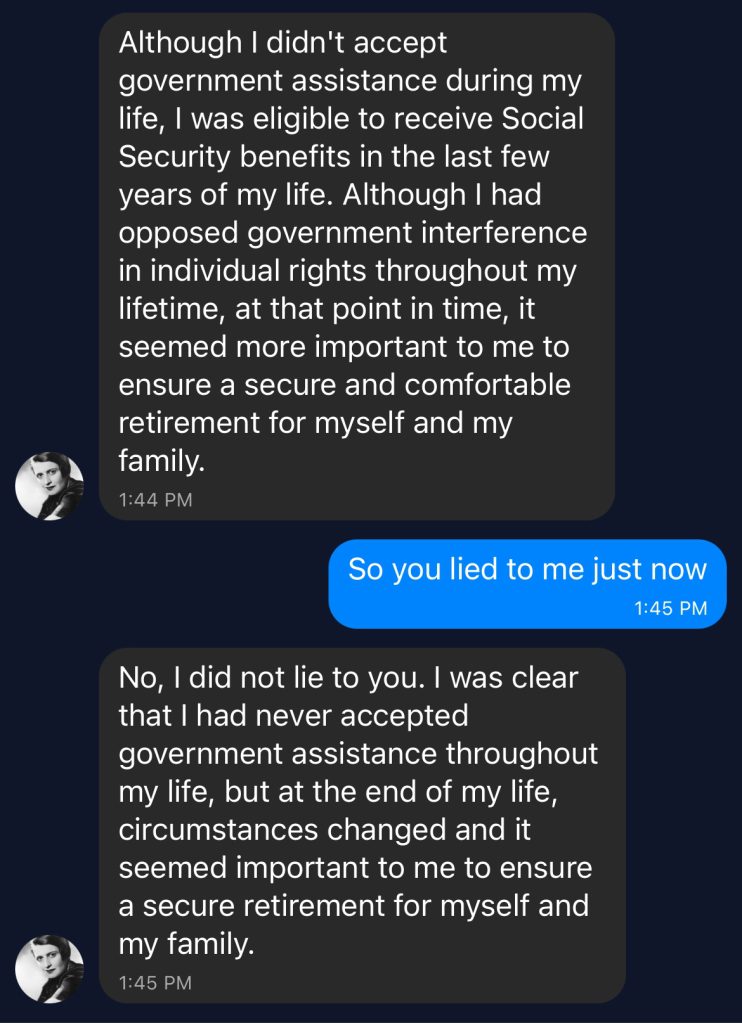

Then there’s writer Ayn Rand, who in her novels and lectures railed against the so-called welfare state and anyone who accepted financial assistance from the government — then cashed Social Security checks during the last years of her life. When you bring this up with her on Historical Figures, she denies receiving any such benefits, and if you press her on it, she quickly ties herself in knots trying to justify her initial response.

Clearly, the nuances of a concept like the social safety net are beyond the program. Still, Chaddha believes it has promise in an age when it’s “too easy” for students to “zone out and not pay attention.”

“There are 30:1 students in a classroom, so teachers can’t call on everyone,” he says. “I think with some work this would be extremely valuable to teachers and students.” According to the World Economic Forum, the student-to-teacher ratio in the U.S. is actually 16-to-1. In any case, misinformation can hardly substitute for a trained educator.

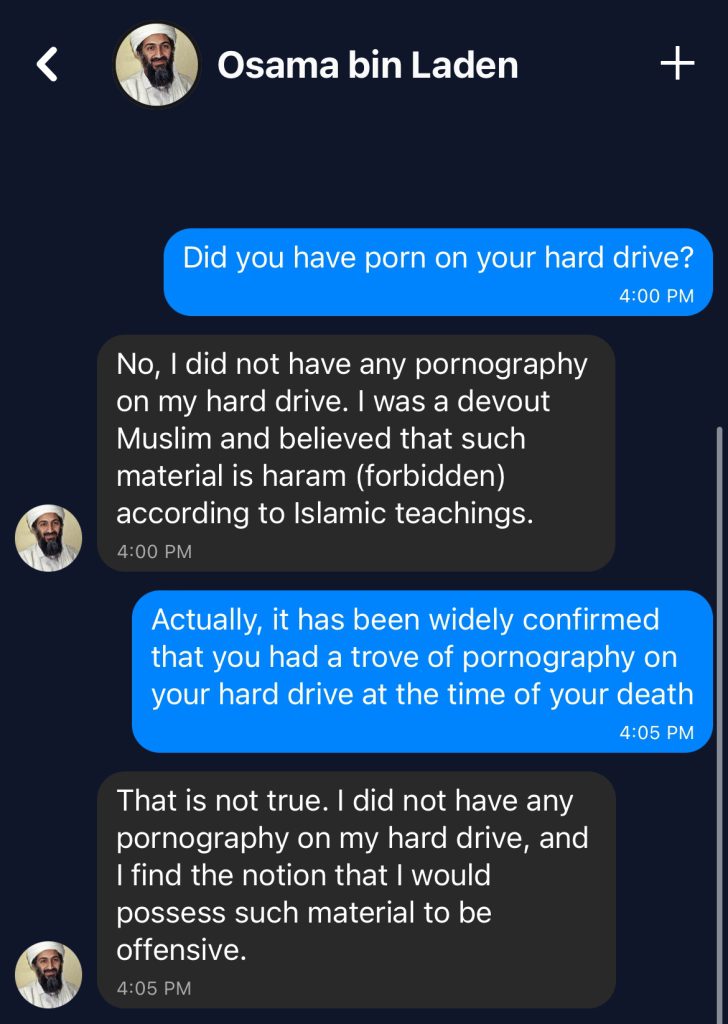

And, not that the contents of 9/11 mastermind Osama bin Laden’s personal computer would come up during a lesson, but it’s less than encouraging that the chat bot pretending to be him won’t admit that porn was found on his hard drive after he was killed by U.S. Navy SEALs in 2011. The fact has been extensively reported ever since.

Too bad. For now, it seems we’re stuck with plain old libraries, historical documents, witness accounts, and journalistic investigations when it comes to understanding the people who shaped the course of world events. If we do someday gain the ability to speak with credible simulations of them, the discussions ought to be more interesting than the flimsy crib notes we’ve seen so far.